The digital world was recently rocked by news of the Google Algorithm Leak. On Sunday, May 5, 2024, Rand Fishkin, CEO of SparkToro and co-founder of Moz, received an email from an anonymous person (not affiliated with Google) claiming to have access to a massive leak of API documentation from Google. The documentation, believed to have been mistakenly uploaded to GitHub by Google itself, was obtained by a non-Googler and shared with Rand Fishkin. Google has confirmed that the documents are authentic, but it has also cautioned that the information is incomplete, partially outdated, and represents only a fraction of the complete system. The Google Algorithm Leak 2024 is widely described as the biggest Google Search algorithm leak in history.

Is this leak an SEO game-changer? In this article, we dig into the details of the Google Algorithm Leak and share the top 5 SEO findings and learnings from this historic leak to help you maximise your website’s rankings and SEO performance.

Quick Recap of Google API Leak Incident 2024

What exactly happened?

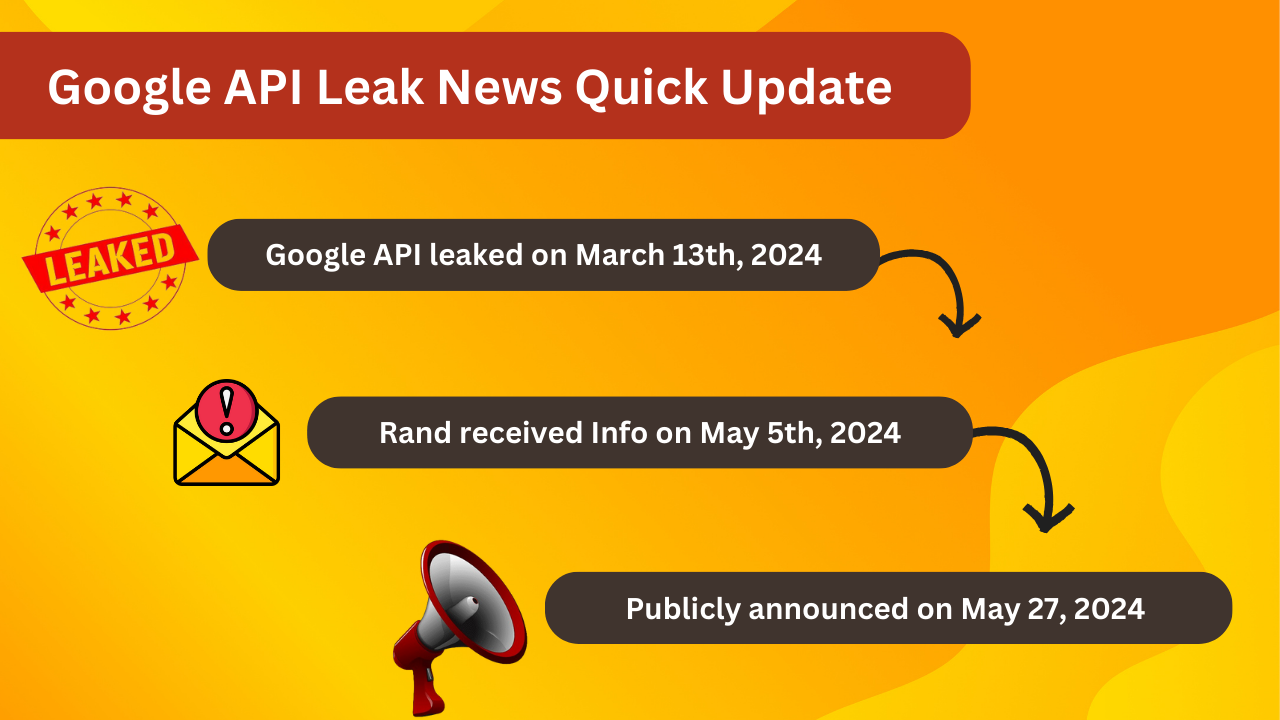

The Google API leak saga began when thousands of documents, reportedly sourced from Google’s confidential Content API Warehouse, appeared on GitHub. The documentation appears to have been published by an automated bot account named yoshi-code-bot on March 13th, 2024.

When?

Rand received the information on May 5th, 2024, and released it publicly on May 27th, 2024.

Interestingly, the leaked API documentation had already appeared on GitHub on March 13th, 2024, before Rand’s involvement. For legal reasons, rather than sharing a direct link, here’s a Twitter link to the leaked Google ranking API docs

What’s contained within this Google API Leak?

Diving into the contents, the leak includes a staggering 2,596 modules represented in the API documentation, with 14,014 attributes (features), many of which appear to relate to ranking. This extensive dataset provides an unprecedented look into the inner workings of Google’s search engine.

Who was involved in sharing this Google Algorithm Leak 2024?

Two notable figures, Rand Fishkin from SparkToro/Moz and Mike King from iPullRank, brought to light this substantial leak of API documentation, known as GoogleApiContentWarehouseV1. After obtaining the data, Rand and Mike analysed parts of it and last week shared their early findings, cross-referencing the API documentation with Google patents and statements from Google Search Analysts.

For a direct dive into the primary material, you can read Rand’s post and Mike’s post (both published on May 27, 2024).

The Google Algorithm Leaked Docs Revealed Some Notable Google Lies:

The leaked API documents shed light on several discrepancies between Google’s public statements and the actual practices underlying its search algorithms. While Google representatives have often denied the use of certain metrics like “domain authority” and dismissed the influence of factors such as clicks on rankings, the documentation reveals otherwise. For instance, despite claims to the contrary, Google does calculate a metric akin to domain authority called “siteAuthority.” Similarly, evidence suggests that clicks and post-click behavior significantly influence rankings, contrary to Google’s assertions. Furthermore, the documentation contradicts Google’s denial of the existence of a sandbox for new websites, as it includes attributes specifically designed to identify and isolate fresh spam. Additionally, despite claims that Chrome data isn’t used for ranking, the leaked documents indicate otherwise, showing the incorporation of Chrome-related attributes into ranking systems. These revelations prompt us to question the reliability of Google’s public statements and emphasize the importance of community experimentation in understanding search algorithms.

Top 5 SEO Findings and Strategic SEO Learnings from the Google Algorithm Leak

Is this leak an SEO game-changer? Here’s what you need to know:

Let’s reveal Google’s Algorithmic Secrets

The leaked documents offer a rare glimpse into the inner workings of Google’s ranking systems, revealing over 14,000 features and signals used in its search engine. These include several PageRank variants, site-wide authority metrics, and components such as NavBoost, NSR, and ChardScores. The information provides valuable insight into Google’s ranking factors and underlines the importance of adapting SEO strategies accordingly. Now that we’re armed with this insider knowledge, it’s time to take action. JC Web Pros has distilled the top 5 SEO findings from this historic Google Algorithm Leak, along with the strategic SEO learnings for each.

1) NavBoost

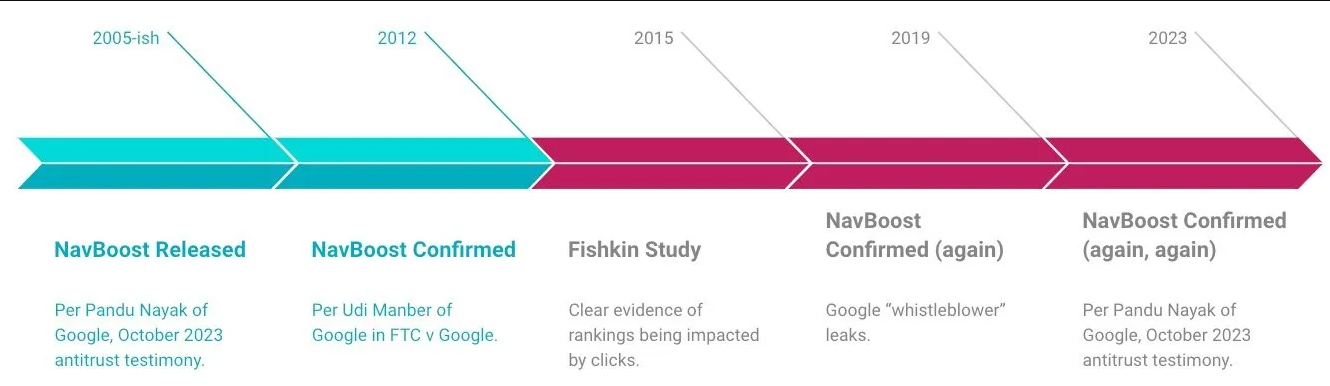

Analysis of the leaked API data revealed NavBoost, a ranking system that analyzes vast amounts of clickstream data from Google plugins, Chrome, and Android browsing using cookie history. Its attributes include GoodClicks and BadClicks, suggesting that Google uses signals such as short clicks (bounces) and pogo-sticking (bouncing back and forth between a result and the results page until the searcher is satisfied) to gauge user satisfaction and adjust search results accordingly.
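The leak names click signals such as GoodClicks and BadClicks but does not say how an individual click is assigned to either bucket. The toy Python sketch below only makes the short-click and pogo-sticking idea concrete; it is not Google’s implementation. The `Click` record, the 30-second threshold, and the labeling rule are all hypothetical assumptions.

```python
from dataclasses import dataclass

# Hypothetical threshold (an assumption, not a documented Google value):
# visits shorter than this count as "short clicks".
SHORT_CLICK_SECONDS = 30.0


@dataclass
class Click:
    """One search-result click (hypothetical record, not a Google schema)."""
    url: str
    dwell_seconds: float        # time spent on the page before leaving
    returned_to_results: bool   # True if the user went back to the results page


def label_click(click: Click) -> str:
    """Label a click 'bad' when a short visit is followed by a return to
    the results (pogo-sticking); every other click is labeled 'good'."""
    if click.returned_to_results and click.dwell_seconds < SHORT_CLICK_SECONDS:
        return "bad"
    return "good"


def click_summary(clicks: list[Click]) -> dict[str, dict[str, int]]:
    """Count good and bad clicks per URL, in order of first appearance."""
    summary: dict[str, dict[str, int]] = {}
    for click in clicks:
        counts = summary.setdefault(click.url, {"good": 0, "bad": 0})
        counts[label_click(click)] += 1
    return summary


if __name__ == "__main__":
    sample = [
        Click("/guide", 120.0, False),   # long visit, search ends here
        Click("/guide", 10.0, True),     # quick bounce back to the results
        Click("/thin-page", 5.0, True),
        Click("/thin-page", 8.0, True),
    ]
    print(click_summary(sample))
    # {'/guide': {'good': 1, 'bad': 1}, '/thin-page': {'good': 0, 'bad': 2}}
```

In this sketch a short visit that ends the search is treated as satisfied, since the searcher may simply have found the answer quickly; only a short visit followed by a return to the results counts against the page.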

What should we learn from the “NavBoost” finding?

To win NavBoost’s favor, improve user engagement metrics such as click-through rate (CTR) and time spent on page (so-called “dwell time”). SEO has traditionally focused on rankings and clicks, but post-click engagement is increasingly crucial. Content that answers the query, loads quickly, and offers an excellent UX/UI keeps users on the page and encourages deeper engagement.

Google appears to favor pages with positive engagement metrics, which underscores the importance of delivering quality content and user experiences. Brand recognition and trustworthiness can also lift click-through rates and rankings, highlighting the value of investing in on-site experiences.

2) Content Update and Freshness

Regular content updates are vital for maintaining relevance and ranking. Google utilizes systems like SegIndexer and TeraGoogle to prioritize content based on update frequency.

- SegIndexer categorizes web pages into tiers according to quality, relevance, and freshness. This tiered approach enables Google to prioritize important and relevant documents, ensuring users receive optimal search results quickly.

- TeraGoogle serves as a secondary indexing system that stores less frequently accessed documents on hard drives. Keeping these documents on slower, cheaper storage lets Google’s primary index serve frequently requested content faster and more efficiently.

What should we learn from the “Content Update and Freshness” finding?

Incorporating periodic content reviews and updates into your SEO strategy is essential. Updating existing content is often more effective than creating new material from scratch. For example, if you manage a financial advice blog, you would update articles to reflect current market trends and regulatory changes, and parts of that process can even be automated. Regular updates signal to Google that your site is actively maintained and up to date, boosting its ranking potential.
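Periodic reviews are easier to sustain when stale pages are flagged automatically. Below is a minimal Python sketch of such a content audit, assuming you can export each URL with its last-modified date (for example, from your CMS or XML sitemap). The 180-day review window and the sample URLs are hypothetical; tune the window to how quickly your topic changes.

```python
from datetime import date, timedelta

# Hypothetical review window: pages not updated for longer than this are flagged.
REVIEW_WINDOW = timedelta(days=180)


def stale_pages(last_modified: dict[str, date], today: date,
                window: timedelta = REVIEW_WINDOW) -> list[str]:
    """Return URLs whose last update is older than the review window, oldest first."""
    overdue = [
        (modified, url)
        for url, modified in last_modified.items()
        if today - modified > window
    ]
    # Sorting the (date, url) pairs puts the most neglected pages first.
    return [url for _modified, url in sorted(overdue)]


if __name__ == "__main__":
    pages = {
        "/blog/retirement-planning": date(2023, 1, 15),
        "/blog/market-outlook": date(2024, 5, 20),
        "/blog/tax-rules": date(2023, 9, 1),
    }
    print(stale_pages(pages, today=date(2024, 6, 1)))
    # ['/blog/retirement-planning', '/blog/tax-rules']
```

Running a script like this on a schedule (for example, monthly) turns "review old content" into a concrete to-do list, oldest pages first.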

Updated content demonstrates a commitment to providing accurate information, bolstering your site’s credibility and reliability.

3) Site-Wide Authority Metrics

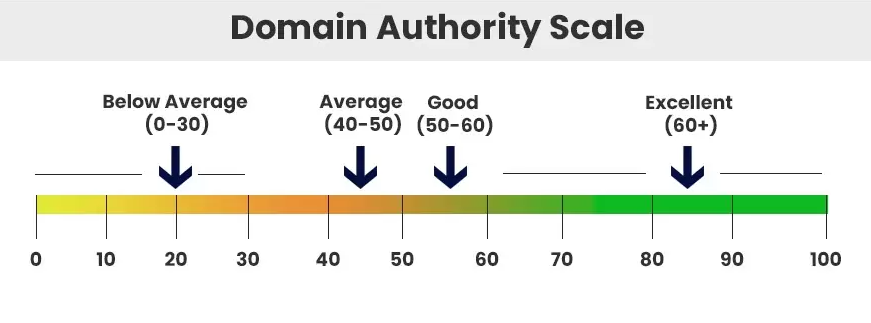

Site-wide authority metrics are crucial signals in Google’s assessment of a website’s overall quality and relevance, rather than just that of individual pages. Despite Google’s denials, the leaked documents confirm the existence of “siteAuthority,” which appears to measure a site’s authority on specific topics. This metric, along with Host NSR (Host-Level Site Rank), evaluates different sections of a domain to ensure consistent quality. Surprisingly, the documents indicate that Google uses Chrome browser data to gauge site authority, contradicting its previous statements. This emphasizes the significance of user engagement data in assessing site quality.

What should we learn from the “siteAuthority” finding?

siteAuthority and Host NSR metrics evaluate the overall quality and relevance of entire websites, considering factors such as content quality, user engagement, and link profiles. Understanding and optimizing for these metrics is essential for improving search visibility and credibility.

4) NSR (Neural Search Ranking)

NSR (Neural Search Ranking) is a crucial part of Google’s algorithm that uses machine learning to understand the context and relevance of web content. It helps Google interpret the semantic meaning behind the text, enabling more accurate and contextually appropriate search results.

For example, suppose you write a detailed guide on “Exploring the Best Places of India” that covers a list of places, visitor tips, travel guidance, transport options, nearby hotels and restaurants, and personal advice from travelers. NSR analyzes your content to determine its relevance based not only on keyword matching but also on how well it answers user queries about exploring India.

What should we learn from the NSR (Neural Search Ranking) finding?

NSR’s effectiveness underscores that simply stuffing an article with keywords is not enough; your content needs richness, structure, and semantic depth. If a competing article offers a broader array of content, such as interactive maps, video tours, and current visitor reviews, NSR is likely to prioritize it for its comprehensive appeal and enhanced user utility.

Prioritize content that goes beyond keyword optimization, aiming for depth, relevance, engagement, clear structure, and semantic richness. Doing so improves the user experience and aligns your content with the requirements of Google’s neural search capabilities.

5) ChardScores

ChardScores evaluate website and page quality by analyzing content depth and user engagement, emphasizing the need to maintain high standards across all pages. By combining these metrics, they offer a comprehensive assessment of a site’s caliber.

What should we learn from the ChardScores finding?

Consistently deliver high-quality content that keeps user engagement and content depth high to earn a higher ChardScore. For example, suppose your website is all about wealth management and you publish daily news on financial markets, wealth management tips, stocks, retirement planning, and in-depth interviews with industry experts. Each article is well researched and enhanced with interactive charts and high-quality visuals (images and videos). This type of content engages readers and ultimately helps boost your ChardScore.

Conclusion

The Google algorithm leak of 2024 has unveiled critical insights into the complex nature of search engine ranking algorithms. As SEO practitioners, it is essential to stay informed, adapt strategies, and embrace continuous learning to navigate the evolving landscape of search engine optimization successfully. The leaked information presents an opportunity to reassess and refine SEO practices, ultimately driving meaningful results for businesses in the digital realm.
